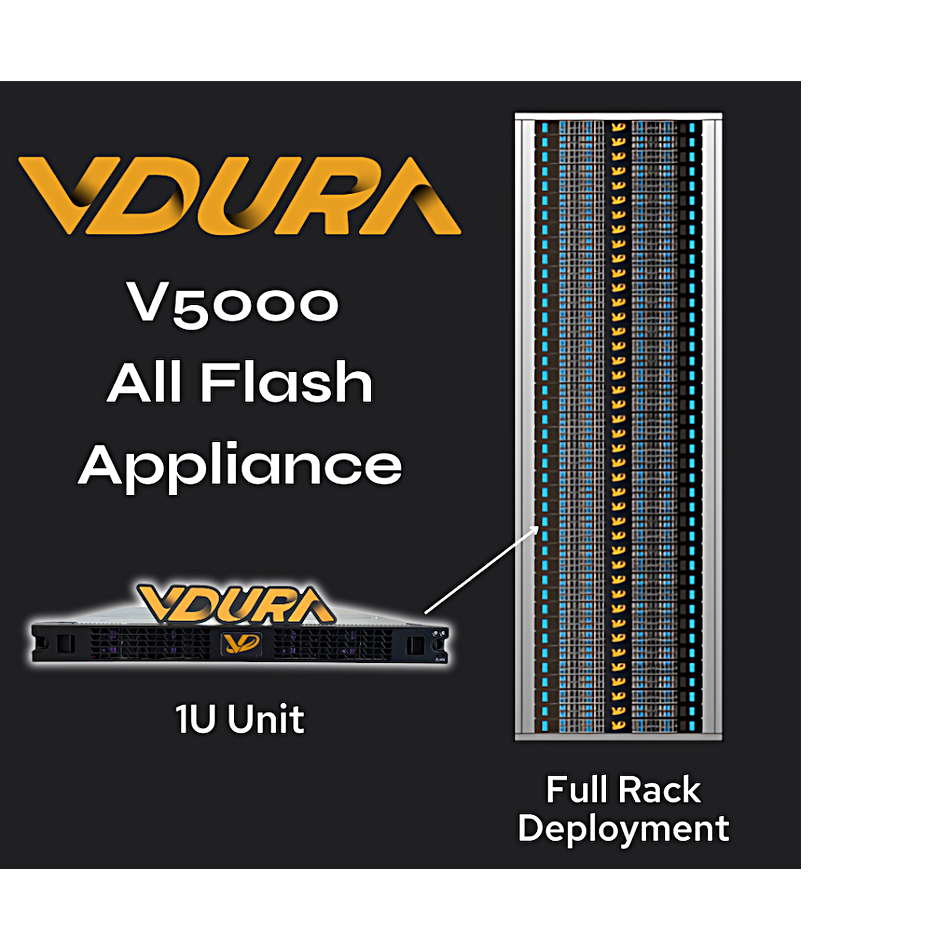

HPC and AI parallel file system storage supplier VDURA has added a high-capacity all-flash storage node to its V5000 hardware architecture platform.

The V5000 was introduced just over three months ago and featured slim 1RU director nodes controlling hybrid flash+disk storage nodes, each consisting of a 1RU server with a 4RU JBOD. The overall system runs the VDURA Data Platform (VDP) v11 storage operating system with its PFS parallel file system. The new all-flash F Node is a 1RU server chassis containing up to 12 x 128 TB NVMe QLC SSDs, providing 1.536 PB of raw capacity.

VDURA CEO Ken Claffey stated: “AI workloads demand sustained high performance and unwavering reliability. That’s why we’ve engineered the V5000 to not just hit top speeds, but to sustain them—even in the face of hardware failures.”

VDURA says “the system delivers GPU-saturating throughput while ensuring the durability and availability of data for 24x7x365 operating conditions.”

An F Node is powered by an AMD EPYC 9005 Series CPU with 384 GB of memory. There are NVIDIA ConnectX-7 Ethernet SmartNICs for low-latency data transfer, plus three PCIe and one OCP Gen 5 slots for high-speed front-end and back-end expansion connectivity. An F-Node system can grow “from a few nodes to thousands with zero downtime.”

A combined V5000 system with all-flash F Nodes and hybrid flash+disk nodes delivers, VDURA says, a unified, high-performance data infrastructure supporting every stage of the AI pipeline, from model training to inference and long-term retention. VDP’s client-side erasure coding lightens the V5000’s compute overhead, and VDURA claims VDP eliminates “bottlenecks caused by high-frequency checkpointing.”

The minimum F-Node configuration is three director nodes and three flash nodes. Both can be scaled independently to meet performance and/or capacity requirements. A 42U rack can accommodate three director nodes and 39 flash nodes, for 59.9 PB of raw capacity.
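The capacity figures can be checked with a few lines of Python. This is a back-of-the-envelope sketch, not VDURA tooling: it assumes decimal units (1 PB = 1,000 TB), that every director and flash node occupies exactly 1RU, and that no rack space is set aside for switches or power. The function names are illustrative.

```python
# Figures quoted in the article.
SSD_TB = 128          # capacity of one NVMe QLC SSD, in TB
SSDS_PER_NODE = 12    # maximum SSDs in a 1RU F Node
RACK_UNITS = 42       # height of the rack
DIRECTOR_NODES = 3    # 1RU director nodes per rack

TB_PER_PB = 1000      # assumption: decimal units, as storage vendors usually quote


def node_raw_tb(ssds: int = SSDS_PER_NODE, ssd_tb: int = SSD_TB) -> int:
    """Raw capacity of one fully populated F Node, in TB."""
    return ssds * ssd_tb


def rack_layout(rack_units: int = RACK_UNITS,
                directors: int = DIRECTOR_NODES) -> tuple[int, int]:
    """Return (flash node count, raw TB) for a rack filled with 1RU nodes.

    Assumption: every rack unit not used by a director holds one F Node.
    """
    flash_nodes = rack_units - directors
    return flash_nodes, flash_nodes * node_raw_tb()


if __name__ == "__main__":
    flash_nodes, rack_tb = rack_layout()
    print(f"Per node: {node_raw_tb() / TB_PER_PB:.3f} PB raw")
    print(f"Per rack: {flash_nodes} flash nodes, {rack_tb / TB_PER_PB:.3f} PB raw")
```

Working in whole terabytes and converting to petabytes only for display keeps the arithmetic exact. These are raw figures; usable capacity after erasure coding would be lower, and the article does not give that ratio.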

Customers can deploy a mix of V5000 Hybrid and All-Flash (F Node) storage within the same namespace or configure them separately as discrete namespaces, depending on their workload requirements.

NVIDIA Cloud Partner Radium is implementing a V5000-based GPU cloud system with full-bandwidth data access for H100 and GH200 GPUs and modular scaling. It says this means storage can grow in sync with AI compute needs, “eliminating overprovisioning.”

The VDURA V5000 All-Flash Appliance is available now for customer evaluation and qualification, with early deployments underway in AI data centers. General availability is planned for later this year, with RDMA & GPU Direct optimizations also planned for rollout in 2025.