Comment: The storage industry is making across-the-board investments to support generative AI workloads, but where are the must-have apps or supplier moats to sustain this investment?

From vector database startups such as Pinecone and Weaviate, to RAG pipeline developers such as Komprise, to fast GPU data delivery from virtually all storage suppliers, it looks as if the entire storage industry has mobilized to support AI workloads, use GenAI models to manage storage better, and feed protected data to RAG LLMs for better response generation.

Apart from GenAI's new and niche role as a storage management tool, GenAI workloads are just another storage workload. The industry wants to support substantial workloads that need stored data, as it always has done. LLM training and inference are two such workloads, and training has dominated so far. But inference is where continual, sustained data access is expected to happen: centrally in datacenters, dispersed to edge devices, and remotely in public clouds. Inferencing will be all-pervasive and take place everywhere, if you believe IT industry execs such as Michael Dell and Marc Benioff, and suppliers such as VAST Data and DDN.

“AI is going to be everywhere,” Dell declared at the Dell Technologies World event in Las Vegas in May last year. Salesforce CEO Marc Benioff said at the Dreamforce conference in September 2024 that there will be a billion AI agents deployed across enterprises within a year. And yet generative AI makes mistakes, getting information wrong or imagining things: hallucinating.

An OpenAI LLM has miscounted the number of times the letter “r” occurs in the word “strawberry.” The AI incorrectly stated that “strawberry” contains only two “r” characters, even when the user asked it repeatedly to confirm. Part of the reason is that the LLM is not actually counting the Rs. It is trained to predict the next token (a word or word fragment) in a sequence, given the context set by the user’s prompt.

Suppose an AI LLM or agent were a human assistant to whom you gave requests, and it miscounted the letters in a word or the corners of a polyhedron, giving flat-out wrong answers. Would you employ them?

Obviously not. Even Microsoft boss Satya Nadella has expressed doubts about the overwhelming onrush of AI. But suppose the AI is domain-specific and can transcribe conversations or translate foreign languages. Would you use that? Yes, you probably would. I use Rev to transcribe recorded interviews. It costs around $10 a month and is cheap, fast, and good enough. It isn't perfect, and I occasionally have to replay the recording to make out a particular word.

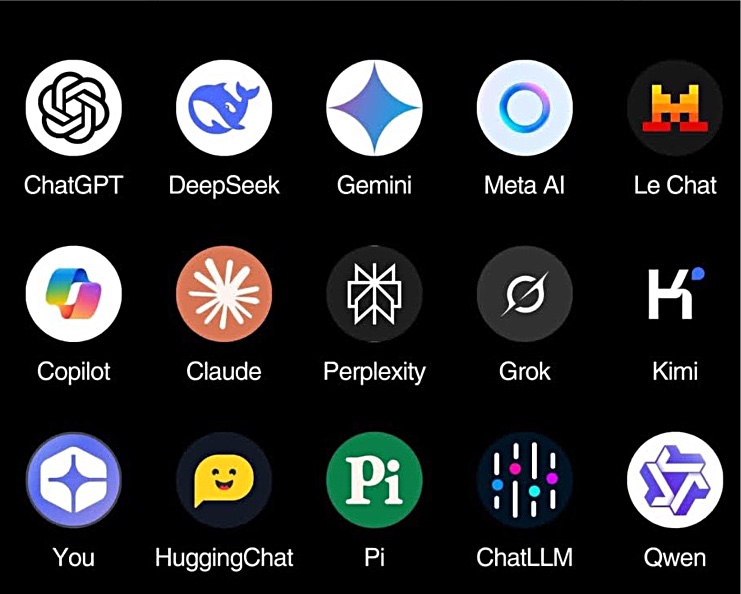

But Rev is not a chatbot, and neither is Otter, another transcription service. OpenAI’s ChatGPT is the chatbot that started the whole AI frenzy, and now we have its various versions alongside Anthropic’s Claude, xAI’s Grok, DeepSeek, and more. Fifteen of them were shown in an xAI image:

Consider the general question-answering chatbots. None of the gigantic, multibillion-dollar AI startups behind them, such as OpenAI, is profitable. None of them has yet produced a killer app that organizations must have and will pay lots of money for. Their products, ChatGPT, Claude, Copilot, Perplexity, Grok and the rest, are the generalized search engines of our AI frontier. They let us sidestep the dross of a Google query result, where four sponsored results hit your screen before the proper results, which are themselves diluted by SEO spam.

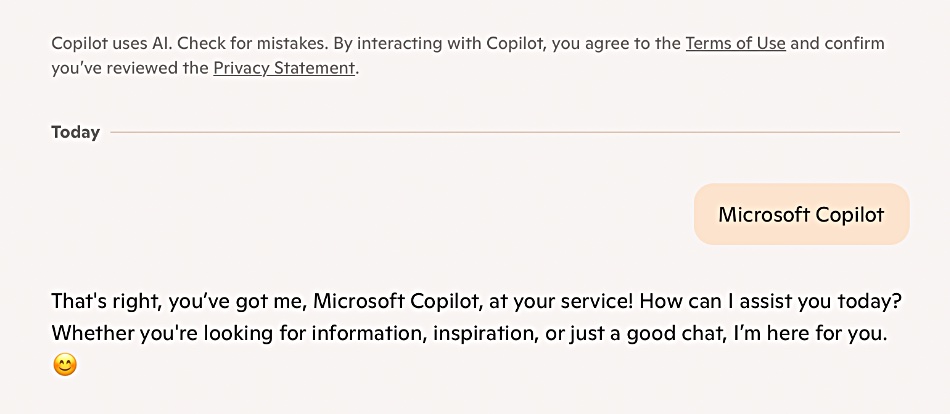

And then there is the Bing search engine, whose queries are hijacked by Copilot. Copilot tells you it can make mistakes and asks you to repeat the query you gave Bing in the first place.

How can you take AI chatbot software like this seriously? Just walk away.

Of course, it is very early days. Suppose you listen to AI naysayers such as PR exec Ed Zitron and his “There is no AI Revolution” shtick, and then try to form a balanced view. On one side are AI chatbots and agents as a general human good. On the other are AI snake oil sellers backed by credulous VCs. You find it hard, because the extreme views are too far apart.

The AI agent revolution may happen, in which case lots of storage hardware and software will be needed to support it. Or it may not, in which case AI-driven storage hardware and software sales will be much lower. The message is: don’t move away from your non-AI customer base and technology just yet. Hedge your bets, storage people.